[Screenshot: SteamShards early alpha: Directional light, no shadows]

But voxel worlds -- especially sandbox voxel worlds, where any block anywhere can be added or removed at nearly any time by any player -- are entirely different from normal graphics programming. That's a lot of any. The rub is that the precalculated lighting techniques common for fixed level designs don't work too well when you've got tens of thousands of voxels on the screen and any of them can change. How much can you reliably recalculate whenever something changes? It's worse in a multiplayer world, too, where it's not just you but any player out there changing stuff. And procedural terrain generation means you can't have an artist go in and place lights and sampling probes by hand.

This is the problem. How can you do effective lighting under such conditions? All geometry is effectively dynamic, and there's tons of it. All lights are effectively dynamic, and if you've seen Minecraft castles lit for night, you know there are tons of torches everywhere. Now you need code to handle what's normally beautified by an experienced, talented artist.

[Screenshot: With and without directional lighting]

Technique #2 is shadows. There are many different shadow techniques, and most of them are great when you have a few lights in the scene, or a few major lights. In outdoor worlds, sunlight is one of those. It's very common to see shadow maps used even in games where a lot of the geometry doesn't cast shadows at all. You know World of Warcraft? Big game, right? Tons of great art and some fancy lighting effects -- but things like tables and chairs cast no shadows. Yet the players' characters do, and obviously that's enough for them.

[Screenshot: Early Minecraft Lighting: Directional light + shadows]

The third screenshot (here on the right) is a very early build of Minecraft, which uses a simple lighting model that just has a directional light (the sun) plus voxel-based shadows. Those shadows give a lot more life to the game, compared to the first image at the top of the page.
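To make "directional light plus voxel-based shadows" a bit more concrete, here's a minimal sketch. It is not how Minecraft actually does it; it's just my guess at the simplest thing that could produce that look. The assumptions are all mine: voxels live in a Python set of `(x, y, z)` tuples, the diffuse term is plain Lambert shading, shadows are cast straight down (as if the sun were directly overhead), and the names `diffuse`, `in_shadow`, `face_brightness`, and `world_height` are hypothetical.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def diffuse(normal, to_sun, ambient=0.2):
    """Lambert term: faces turned toward the sun are brighter.

    `ambient` is an assumed floor so unlit faces are not pure black.
    """
    n = normalize(normal)
    s = normalize(to_sun)
    lambert = max(0.0, sum(a * b for a, b in zip(n, s)))
    return ambient + (1.0 - ambient) * lambert

def in_shadow(solid, voxel, world_height):
    """Assumed shadow rule: a voxel is shadowed if anything solid sits above it."""
    x, y, z = voxel
    return any((x, yy, z) in solid for yy in range(y + 1, world_height))

def face_brightness(solid, voxel, normal, to_sun, world_height, ambient=0.2):
    """Combine the two: shadowed voxels only get the ambient term."""
    if in_shadow(solid, voxel, world_height):
        return ambient
    return diffuse(normal, to_sun, ambient)

# Two ground blocks; one has a block floating overhead.
solid = {(0, 0, 0), (1, 0, 0), (0, 5, 0)}
up = (0, 1, 0)
shaded = face_brightness(solid, (0, 0, 0), up, up, world_height=16)
sunlit = face_brightness(solid, (1, 0, 0), up, up, world_height=16)
```

The nice part of a column check like this is that when a block changes, only the voxels below it in the same column need relighting, which matters a lot when everything is dynamic.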

There are some other tricks that one can add: bump maps, specularity maps, emissive textures, or extremely tricky stuff like ambient occlusion, depth-of-field, "god rays," bloom, and so on. I'm gonna skip most of these and stick to one, because of its relevance to voxel worlds: occlusion.

|

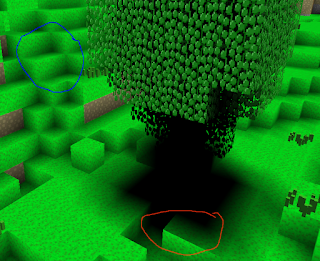

| Voxel Occlusion in SteamShards |

On a bright day here in real life, interior rooms still appear well-lit not because of direct sunlight but because whatever sunlight does come in (even if none of it is direct!) bounces around everywhere. Just having a window open to the sky can provide enough indirect light to effectively illuminate an entire room -- and even the next room over.

Occlusion is somewhat the reverse of radiance: it models where light isn't bouncing from. Corners are darker than the middle of walls because the adjacent walls (and floor or ceiling) block light that might otherwise come in. This final image is another old screenshot from SteamShards, one with an early implementation of a shadowing algorithm. There's no directional lighting here -- everything is just shadows and occlusion. Each vertex looks at the four voxels in front of it. The more of them that are filled, the darker the vertex.
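Here's a rough sketch of that per-vertex rule, under some assumptions of mine rather than the actual SteamShards code: voxels are unit cubes stored in a set of `(x, y, z)` tuples, a vertex sits on an integer lattice corner, and "in front of" means the four voxels that touch the vertex on the side the face points toward. The function names and the `darkest` parameter are made up for illustration.

```python
from itertools import product

def voxels_in_front(vertex, axis, sign):
    """Return the four voxels touching `vertex` on the face's outward side.

    `axis` is 0, 1, or 2 (x, y, z) and `sign` is +1 or -1, together giving
    the face normal. A voxel is named by its minimum corner.
    """
    others = [i for i in range(3) if i != axis]
    coords = []
    for a, b in product((-1, 0), repeat=2):
        offset = [0, 0, 0]
        offset[others[0]] = a
        offset[others[1]] = b
        # Voxels on the positive side start at the vertex; on the
        # negative side they start one unit back.
        offset[axis] = 0 if sign > 0 else -1
        coords.append(tuple(v + o for v, o in zip(vertex, offset)))
    return coords

def vertex_brightness(solid, vertex, axis, sign, darkest=0.4):
    """Darken linearly with the number of filled neighbors (0 to 4)."""
    filled = sum(1 for c in voxels_in_front(vertex, axis, sign) if c in solid)
    return 1.0 - (1.0 - darkest) * filled / 4

# A floor block at the origin with a second block sitting diagonally above it.
solid = {(0, 0, 0), (1, 1, 0)}
near_wall = vertex_brightness(solid, (1, 1, 0), axis=1, sign=+1)  # one neighbor filled
open_corner = vertex_brightness(solid, (0, 1, 1), axis=1, sign=+1)  # nothing in the way
```

Interpolating these per-vertex values across each face is what gives corners that soft darkening. Note that for a visible face, the voxel directly in front of the face is empty, so in practice a vertex usually has at most three filled neighbors.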

There are troubles once one adds in subvoxel shapes, however, and that's one of the issues that I'm currently addressing. The technique I'm using is Pretty Dang Messy™, but I'll write it up here when I'm happy with it!